Analysing Experimental Results With R

May 27, 2014 § 2 Comments

In this post, I will briefly show an analysis of the results of the experimental design I created earlier:

Creating an Experimental Design in R

The design concerns the interactions between storage protocol, iops, read%, rand% and block size for IO in VMWare. The effect under analysis is the CPU utilization of the ESX kernel. The motivation for this is discussed here:

NFS vs Fibre Channel: Comparing CPU Utilization in VMWare

I load the previous experimental design, having added a response column:

load( “V:/Doe/Design.1.rda” )

Design.1.withresp <- add.response(Design.1,

“V:/Doe/Design.1.with_response.csv”, replace=FALSE)

Now, apply linear regression and summarize the results:

LinearModel.1 <- lm(cpu ~ (read + rand + blk_sz + protocol + iops)^2,

data=Design.1.withresp)

summary(LinearModel.1)

This produces the following table:

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3.256812 0.018130 179.632 < 2e-16 ***

read1 -0.074656 0.018130 -4.118 4.34e-05 ***

rand1 -0.001125 0.018130 -0.062 0.95054

blk_sz1 0.040906 0.018130 2.256 0.02440 *

protocol1 0.608219 0.018130 33.547 < 2e-16 ***

iops1 1.032375 0.018130 56.942 < 2e-16 ***

read1:rand1 -0.016969 0.018130 -0.936 0.34967

read1:blk_sz1 -0.018875 0.018130 -1.041 0.29825

read1:protocol1 -0.006219 0.018130 -0.343 0.73171

read1:iops1 -0.110219 0.018130 -6.079 2.10e-09 ***

rand1:blk_sz1 -0.017750 0.018130 -0.979 0.32795

rand1:protocol1 -0.002937 0.018130 -0.162 0.87134

rand1:iops1 0.005656 0.018130 0.312 0.75516

blk_sz1:protocol1 0.062063 0.018130 3.423 0.00066 ***

blk_sz1:iops1 0.026219 0.018130 1.446 0.14865

protocol1:iops1 0.369719 0.018130 20.392 < 2e-16 ***

—

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ‘ 1

Residual standard error: 0.4587 on 624 degrees of freedom

Multiple R-squared: 0.8862, Adjusted R-squared: 0.8835

F-statistic: 324 on 15 and 624 DF, p-value: < 2.2e-16

The most immediately useful column is the estimate of the coefficient. If we assume that the CPU utilization is given by an equation of the form:

In this case, we see that ,

and so on for all the coefficients.

Meanwhile, each of the factors is normalized so that the low value corresponds to -1, and the high value to +1. So, we have:

Where protocol is -1 in the case of fibre channel and +1 in the case of NFS.

As load increases, we expect the CPU utilization to be dominated by effects involving relationships with IOPS. That is to say, if without the terms not involving IOPS:

So, we can simplify things be ignoring all effects not involving IOPS, giving the following formula for CPU utilization:

The terms involving interactions with rand and block size clearly have relatively little impact, so I discard them with minimal loss in precision.

In the original experiment, the CPU utilization was calculated for 8 cores. Normalizing for a single core, we have:

It is now also possible to approximate the CPU cost of NFS over fibre channel:

With the minimum difference for write IO, and the maximum for read.

So, in this experiment, NFS is found to be of the order of twice as expensive as fibre channel.

Creating an Experimental Design in R

May 27, 2014 § 2 Comments

Using the DoE package of R, I create the following experimental design:

Design.1 <- fac.design(nfactors= 5 ,replications= 20 ,repeat.only= FALSE ,blocks= 1 ,

randomize= TRUE ,seed= 25027 ,nlevels=c( 2,2,2,2,2 ), factor.names=list( read=c(0,100),

rand=c(0,100),blk_sz=c(4,8),protocol=c(‘”fc”‘,'”nfs”‘),iops=c(1000,4000) ) )

The design can then be exported for later use:

export.design(Design.1, type=”all”,path=”V:/Doe”, file=”Design.1″,

replace=FALSE)

I use this design to analyse the issues raised in this post:

here:

NFS vs Fibre Channel: Comparing CPU Utilization in VMWare

May 2, 2014 § 3 Comments

SUMMARY

Some years ago we were faced with the choice of which storage network protocol to use in our virtualized environment. Central to the discussion was a white paper, co-authored by Netapp and VMWare, comparing throughput and CPU utilization for NFS, FC and iSCSI. Broadly speaking the paper concluded that the differences in throughput were trivial, and for CPU utilization were under most circumstances small. At the time I wasn’t satisfied with the document, or indeed the conclusions that were able to drawn from it.

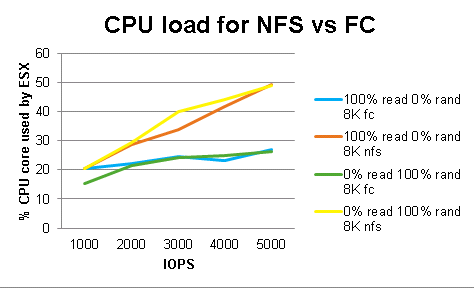

In this post, I will outline some work I performed recently using Queuing Theory1 and Design of Experiments2 to draw more specific conclusions from a broadly similar set of experiments to those undertaken by NetApp. I show that, if we restrict our analysis to storage efficiency in the hypervisor, in fact the choice of protocol is the dominant influence on CPU load, after IOPS (as can clearly be seen below in figure 4), and that under some circumstances, where latency of the order of 100μs is significant, or the total volume of IO is large, the choice of protocol can be an important determinant of performance, and potentially cost.

LIMITATIONS OF THE NETAPP PAPER

The white paper in question is NetApp document TR-36973. The equipment is more than a little out of date now, ESX 3.5, GigE, NetApp 2000 and 3000 series boxes and 2Gb FC-AL fibre. They ran 4K and 8K random and sequential workloads at various read/write ratios and at a range of thread counts. Tests were run to compare workloads over NFS, FC and iSCSI. For the purpose of this analysis, I ignore iSCSI and concentrate on NFS and FC.

In making use of this paper some issues become apparent:

1. All experimental results are scaled with respect to FC. This makes it hard to get a sense of what the result means in real terms, or to compare results between experiments.

2. The IOPS aren’t fixed between, or within, experiments. If response times increase, the IOPS, and hence the throughput, will tend to drop. This is observed in the results with NFS producing somewhat less throughput than FC under increased load.

If the IOPS are suppressed for NFS, then we might expect this also to keep the CPU utilization down, since there are fewer IOs for the CPU to process per unit of time. Despite this, the CPU is working harder for NFS.

3. By throttling based on the thread count, they are implicitly assuming closed queuing. This is not necessarily applicable to modeling transactional workloads.

4. Due to caching and interaction with the filesystem, it isn’t clear what IO is being processed by the VMWare IO subsystem. This will tend to minimize differences in the performance of the underlying infrastructure, since it is only being used a proportion of the time.

To progress with the analysis, fresh data is needed.

STEP 1: Test Configuration

I set up a small test configuration to run a similar set of experiments to those in the paper, though this time just comparing NFS and FC. There are some significant differences between the setup NetApp used and the one I built. Partly that is because technology has moved on since 2008, but most of the changes are because I have simplified the setup as far as possible.

The physical setup was as follows:

Figure 1

I ran a single minimally configured Debian guest running the 3.2.0-4-686-pae kernel inside an ESXi 5.5 (1331820) hypervisor. I configured a disk from an NFS datastore, and one from an FC datastore. The guest was assigned 8 vCPU and 1GB of memory.

STEP 2: Workload Generation

In their test, NetApp used Iometer4 which throttles the workload based on thread count. Most freely available tools operate along the same lines as Iometer. To achieve the required level of control, I modified NetApp’s sio_ntap5 to hold a specified level of IOPS. A more detailed description of this work can be found here:

https://ascknd.com/2014/05/28/616/

I ran sio_ntap with the directio option set, bypassing cache and directed to a raw device. By doing this, different factors that directly influency the intensity of the workload can be compared and their effect quantified.

STEP 3: Experimental Design

NetApp consider a number of factors in their analysis – read%, random%, block size, protocol and thread count. I’m going to substitute IOPS for thread count. The response we are interested in is the CPU used by the ESX kernel (). To calculate this we use the Physical Cpu(_Total)\% Util Time field from ESX top, minus the sum of the %CPU user and system from top on the debian guest. This gives the amount of work ESX is doing independent of the guest.

In order to formulate a model of this system, it is necessary to make some assumptions:

1. An IO of any given type will take a fixed amount of CPU resources regardless of load.

1.1 We therefore expect to see all effects to involve a linear interaction with IOPS. This can readily be validated by plotting a graph showing the percentage of a CPU core used by the ESX kernel, as discussed above, for various workloads and IOPS:

Figure 3

It can readily be seen that NFS and FC diverge with plausibly linear behavior.

1.2 For any given read percent, or random percent, an IO is either read or write, random or sequential. We therefore expect their effect to be linear.

2. Since we are only interested in block sizes of 4 or 8, we will only consider linear effects of block size. No claims are made about applicability of the model to larger block sizes

3. Some background load will exist. This is assumed to be the load for zero IOPS. This will be deducted from all subsequent calculations for CPU load. Taking this into account, the previous graph can be amended so that the average CPU utilization at zero IOPS is zero:

Figure 4

All effects are therefore expected to be linear, to involve interactions with IOPS and go through the origin.

The following design will be used:

| read% | rand% | blk_sz | protocol | iops |

| 0 | 0 | 4 | fc | 1000 |

| 100 | 100 | 8 | nfs | 4000 |

Table 1

This gives a full factorial experimental design with 32 experiments. Repeating every experiment 20 times, 640 runs are needed.

| run | read% | rand% | blk_sz | protocol | iops |

| 1 | 0 | 100 | 4 | fc | 4000 |

| 2 | 0 | 0 | 8 | fc | 1000 |

| <snip> | |||||

| 639 | 0 | 0 | 4 | fc | 4000 |

| 640 | 0 | 0 | 8 | nfs | 1000 |

Table 2

A more detailed discussion on how to create the design in R is shown here:

We now need to run the modified sio_ntap tool with the above parameters 640 times and analyze the results.

STEP 4: Analysis of Results

R6 is used with the DoE7 package to analyze the results. This solves the experimental results as a system of simultaneous equation. A detailed explanation of this is presented here:

The analysis shows that we can approximate the CPU utilization, by the following equation for large IOPS:

Equation 1

Which implies that NFS is approximately 1.96 times as expensive in terms of CPU utilization as Fibre Channel (see linked article).

STEP 5: Real World Context

Whether this difference in the cost of NFS and FC IO is important, or not, depends on the extent to which it has a significant impact on macro level system characteristics that relate to the financial cost, and feasibility, of delivering on design requirements.

Solving equation 1 for a single IOP, we see that one NFS IO uses % of a core, which implies each IO is using 81μs of CPU time compared to 41μs for fibre channel. The behaviour of the CPUs under load can be modelled as an M/M/8 queue8. We see that, as one would expect for a multiprocessor system, it copes well:

| response(secs) | util_% |

| 0.000081 | 0 |

| 0.000081 | 20 |

| 0.000081 | 40 |

| 0.000085 | 60 |

| 0.000104 | 80 |

Table 3

A typical midrange disk subsystem can turn around an IO in about 500μs. Some higher end subsystems can respond in 150μs, or less. So this is an effect that is of the same order of magnitude as other metrics that clearly can determine system performance.

These individual IOs add up of course. In this example, one CPU core can handle ~12K NFS IOPS, or 24K FC IOPS. This potentially has architectural and cost implications if a significant intensity of IO is being serviced. A 70K IOP requirement would require a 6 cores for NFS, but only 3 for FC, purely processing the IO in the hypervisor.

CONCLUSIONS

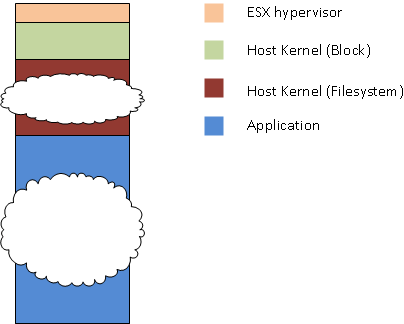

While NFS clearly induces more load on the ESX server than FC, to some degree these costs need to be seen in context. The reason the NetApp paper found a relatively small difference between the protocols is that there are many other factors contribute to the overall CPU load associated with doing IO. Often the cost within the hypervisor will be relatively insignificant:

Figure 5

Whether the hypervisor is important depends on the interaction between the workload and each of these other elements. Analysing these interactions is a larger problem and not trivial to address in the general case.

1The Art of Computer Systems Performance Analysis, p.507 R. Jain, John Wiley & Sons Inc, 1991

2The Art of Computer Systems Performance Analysis, p.293 R. Jain, John Wiley & Sons Inc, 1991

3 Performance Report: Multiprotocol Performance Test of VMware® ESX 3.5 on NetApp Storage Systems, https://communities.vmware.com/servlet/JiveServlet/download/1085778-15260/NetApp_PerformanceTest_tr-3697.pdf, Jack McLeod, NetApp, June 2008

4 http://www.iometer.org/

5 https://communities.netapp.com/blogs/zurich/2011/01/24/sio–performance-load-testing-simplified

6 http://cran.r-project.org/

7 http://prof.beuth-hochschule.de/groemping/software/design-of-experiments/project-industrial-doe-in-r/

8 https://ascknd.com/2014/05/15/442/

Document Changes:

27/05/2014 – Updated experimental design with 20 replications instead of 5 and simplified the setup of the block devices. All equations and graphs modified to agree with new data. Some additional minor cosmetic changes and corrections.